LocalAPI.ai - Local AI Platform

Easily invoke and manage local AI models in your browser.

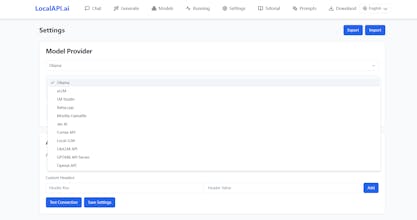

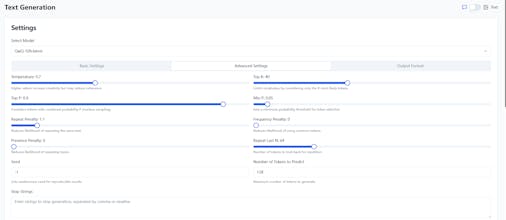

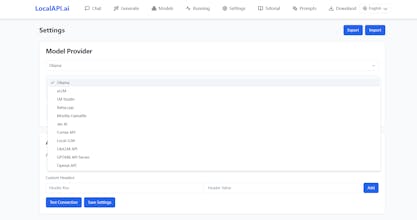

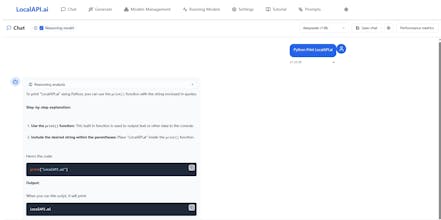

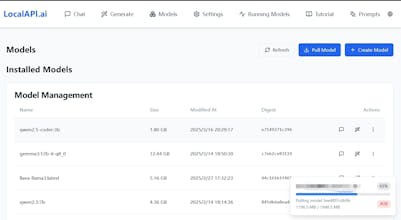

LocalAPI.ai is a local AI management tool built for Ollama. It runs in the browser from a single HTML file and needs no complex setup. It is also compatible with vLLM, LM Studio, and llama.cpp.

Pricing: Free

About this launch

Points: 5
Comments: 1
Day Rank: #83
Week Rank: #483

LocalAPI.ai - Local AI Platform was hunted in Developer Tools, Artificial Intelligence, and GitHub. It launched on April 2nd, 2025, and is not rated yet. This is LocalAPI.ai - Local AI Platform's first launch.