The top dos and don'ts of building a product experience

Debrief: A Sprig x Product Hunt event about understanding the real reasons behind users' actions, in order to build products and features people truly need.

Yesterday, Sprig x Product Hunt hosted a webinar to explore the best practices and pitfalls to consider when crafting a stellar product experience.

Host Jamie Sprowl was joined by Sprig’s CEO Ryan Glasgow and Head of Product Ning Ma, who answered community questions before presenting their Top 5 Dos and Don’ts of Building a Product Experience.

A quick intro to Sprig

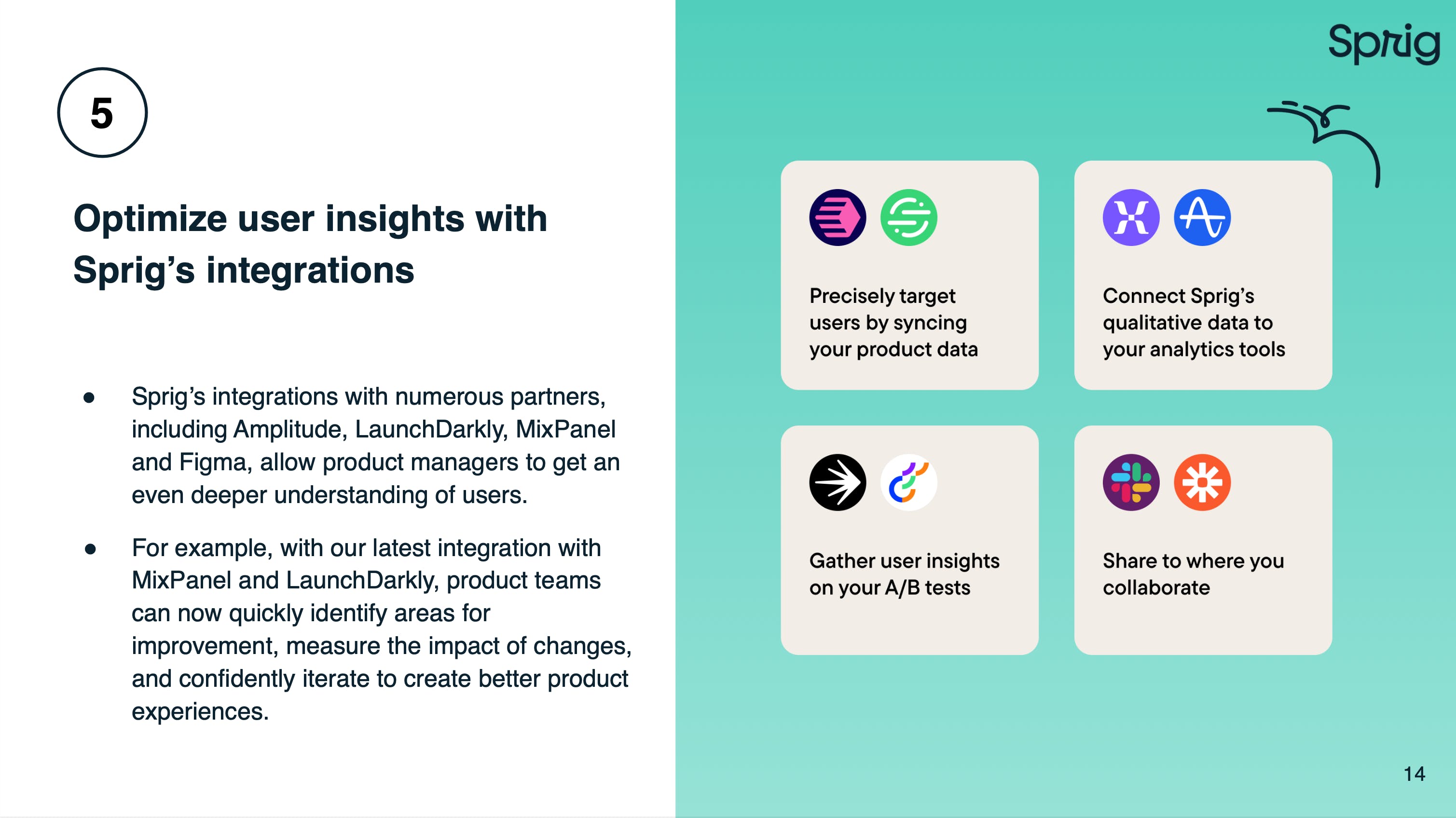

Sprig is a product experience insights platform that partners with analytics companies like Mixpanel and Amplitude. It focuses on understanding the "why" behind user behavior.

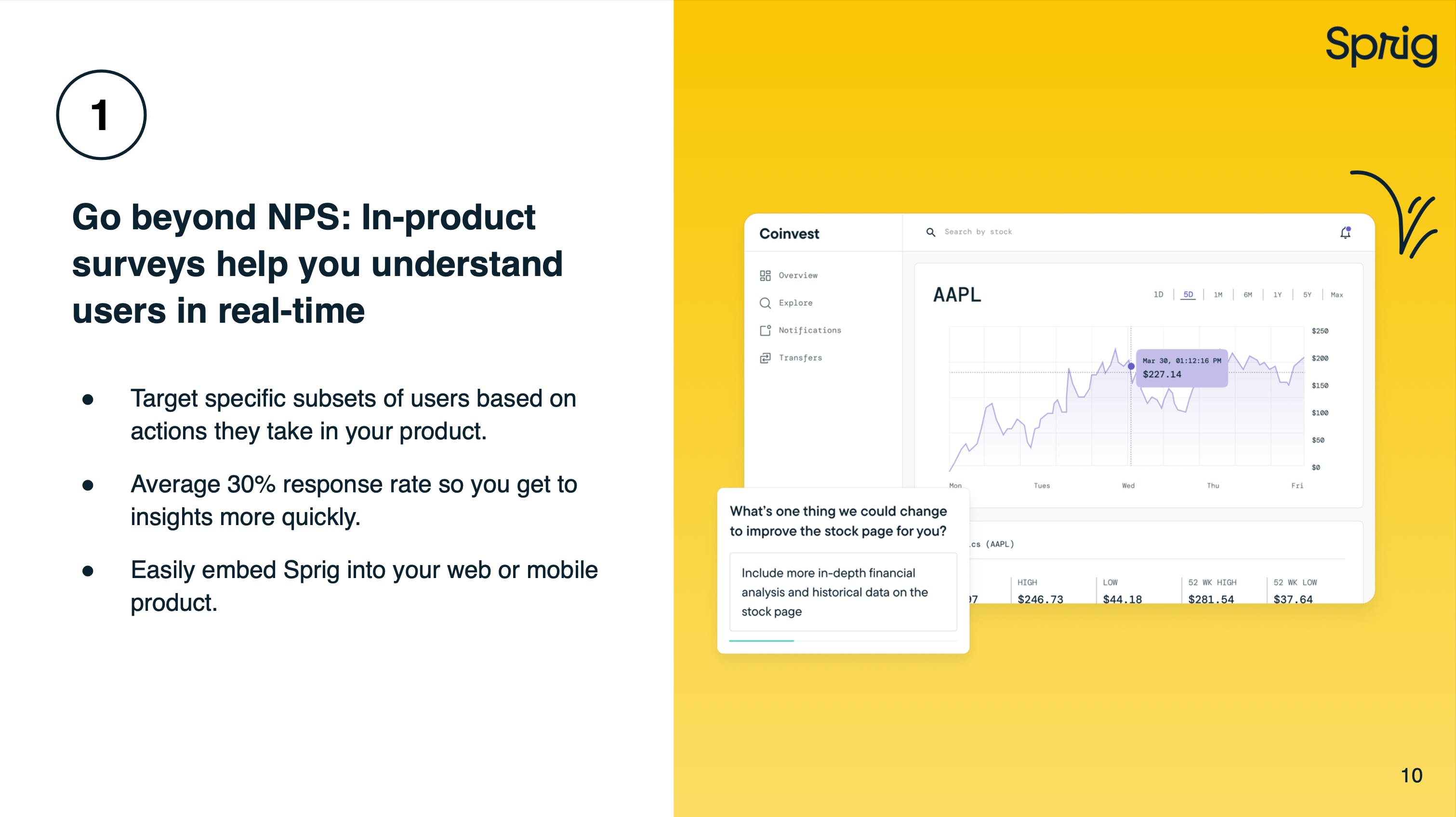

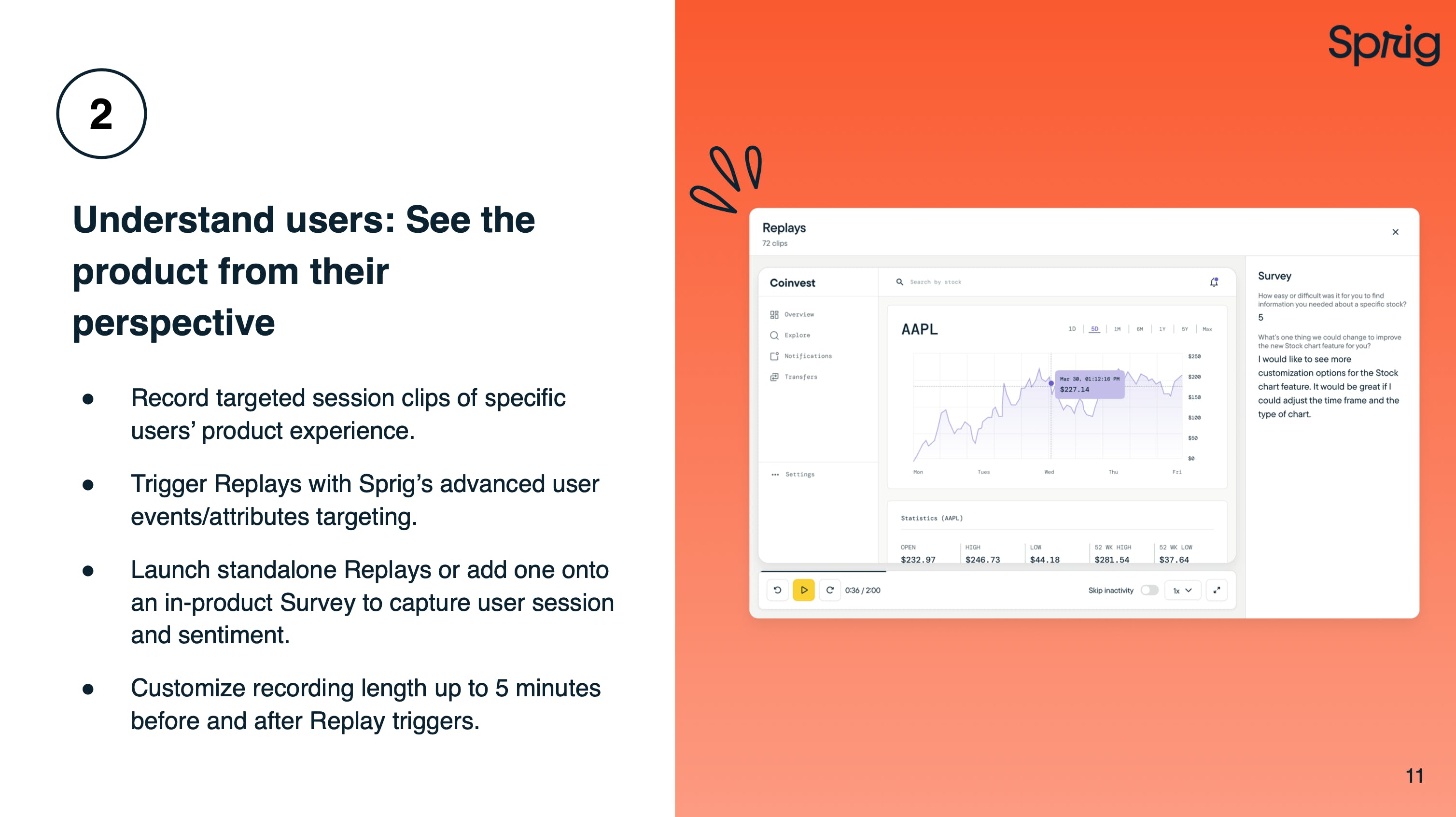

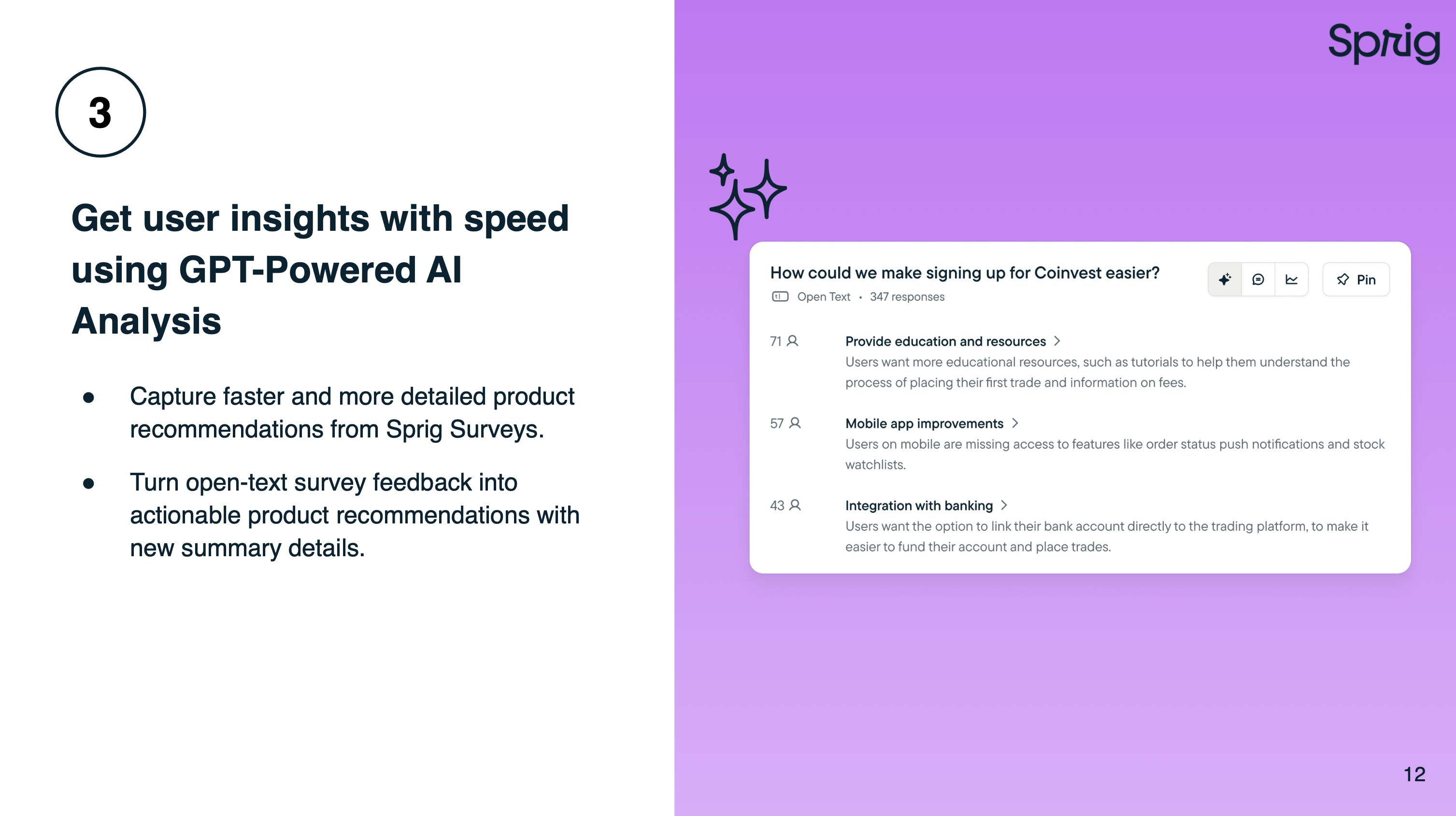

Sprig offers four main products: in-product surveys, session replays, prototype testing, and GPT for analyzing user insights. Its real USP is the way those tools can be combined to save product teams hours on feature prioritization and insights-backed roadmapping.

On user insights, product roadmapping, and the many hats of a PM

Ning and Ryan kicked off by answering audience questions about why user insights and building products based on user feedback are crucial PM considerations.

Why?

Because contextual in-product feedback reveals the real reasons behind user actions, and that understanding helps you form a roadmap for building the products and features users truly need.

When asked how product managers can juggle timelines, engineering expectations, gathering insights, prioritizing features, and all the rest, Ning and Ryan reflected on their past experiences at Weebly and Uber.

You can watch the full event on YouTube here.

Scroll for key takeaways, conversation highlights, and slides. 👇

Key takeaways

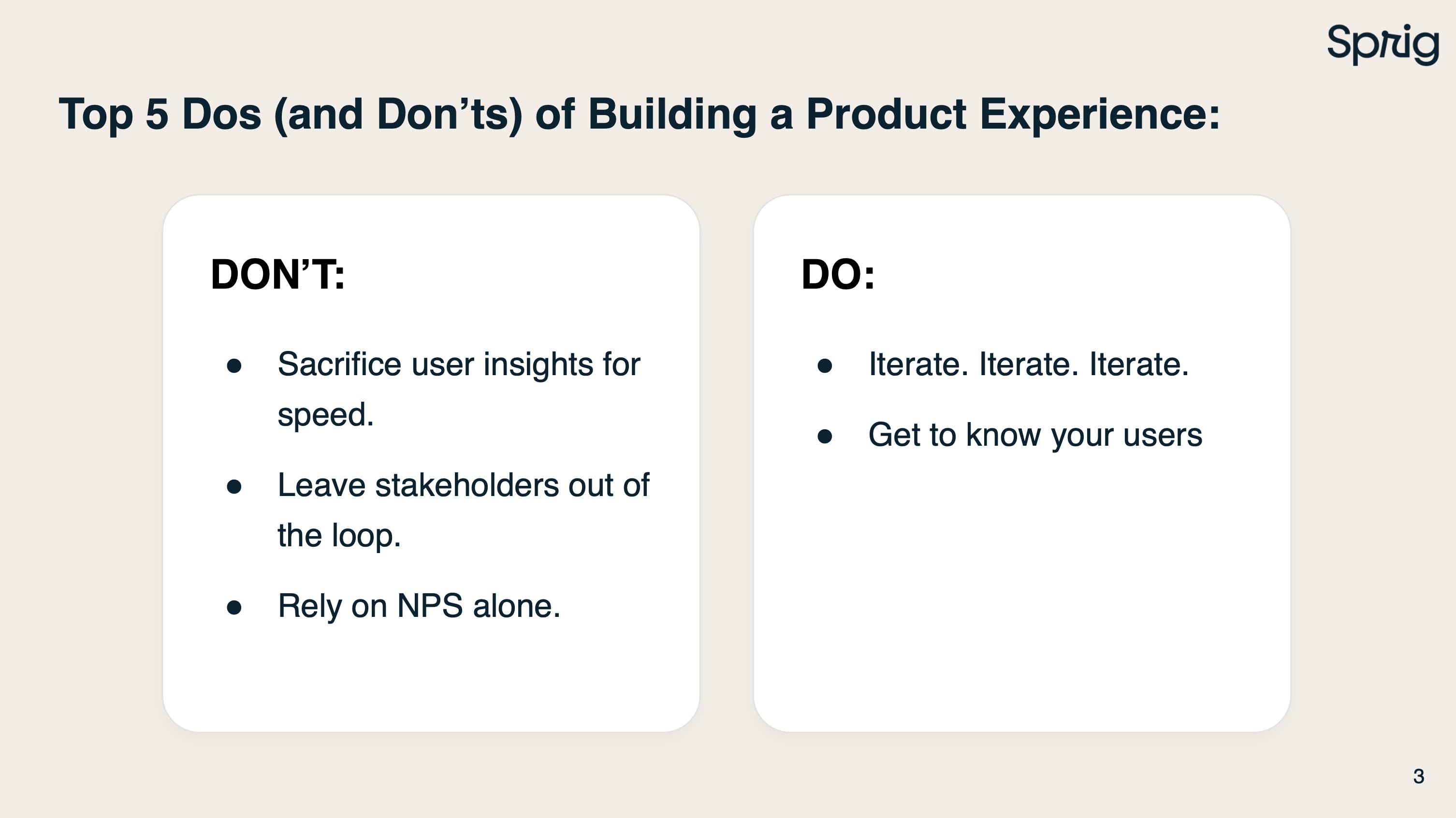

On the do's and don'ts of building a product experience

High-level, here are a few takeaways:

- Don't sacrifice user insights for speed. Find ways to get user insights and ship quickly.

- Don't leave stakeholders out of the loop. Make insights accessible to all stakeholders.

- Don't rely on NPS alone.

- Don’t chase shiny new features at the expense of continuous UX metrics and core product experience improvements.

- Do rapidly iterate on your product experience. Launch features in small groups and quickly iterate based on user feedback.

- Do get to know your users. Understand the "why" behind user behavior and use tools like Sprig to gather fast user insights.

- Do set up continuous UX metrics and prioritize core product experience improvements.

- Do establish a customer advisory board or design partner program for continuous user insights.

- Do implement a process for rapid iteration on product experience based on user feedback.

Conversation highlights

Why do user insights matter in product roadmapping, and why should PMs care?

Ning Ma: So I've been in product management for about nine years now. I've launched a lot of successful products and, at the same time, some that didn't work well.

Looking back, I’ve tried to understand what went well, what didn't go well, and why. And it’s really important that we build products based on user feedback.

One test that I have is that when we look at some kind of product analytics data, we always look at revenue information to see the end KPI of the product. But oftentimes what we're missing is the why behind user actions. I was really inspired by a product like Sprig, where you can actually go down to the why behind certain user interactions with your product.

Quite simply, the more that you can understand how users feel and the reasons behind why they are taking certain actions, the better you can form a roadmap that guarantees you are building products that are truly based on what users really want.

As a PM, there's a lot to juggle. Timelines, engineering expectations, etc. How have you, as PMs, managed that? All of these things, and still finding time to gather insights? Are there any specific examples that you have from either of your careers?

Ryan Glasgow: Before founding Sprig, I was a product manager at several different companies, and one of them was Weebly. I believe we’d just launched a new iOS app. It was in TechCrunch and all the major tech publications, and we were getting, you know, tens of thousands of downloads.

But we knew that there were some opportunities to strengthen the product experience. We knew that there were some bugs and edge cases that we didn't quite catch in our QA. And I knew the CEO was constantly messaging me on Slack asking, you know, Hey, what's going on here? How are we gonna get this fixed?

I didn't have time to set up, you know, 2,000 calls and uncover all those edge cases. So, I launched a quick in-product survey, where and when I knew users were having issues, to ask what those issues were, and then I typed all of it into a spreadsheet. It turned out to be a really powerful way to quickly and instantly understand exactly what was working, but also where and how exactly that mobile app could be strengthened.

I looked at all the issues, sorted through all of them, prioritized all of them, gave them to engineering, and within just a matter of weeks, we saw all those edge cases and all those little issues cleaned up, and we were off to the races. It ended up being an award-winning product, winning award after award after that, but only once we were able to get to really listening to that user feedback and resolving those issues.

Ning Ma: I have two tips. One is collaboration. I used to work at Uber, which has much larger product teams. We had engineers, you know, product analysts, and data scientists.

At that time, I actually did a lot of collaboration with those teams to really drill down into specific data and run specific experiments to get to the root cause of certain challenges that we were seeing from the product data side. That challenge, we solved by using replay tooling to really look at how people interact with the product.

But then the challenge became that, while it's great that you can see how people truly interact with the product, it's really time-consuming, because you have so many clips to watch.

So at Sprig, more recently, we’re solving that problem with the launch of Sprig’s Session Replays and AI Insights. Because they’re attached to the impacts of in-product surveys, you can easily find replays where, for example, people reported their experience wasn’t so great. You can also just filter and only watch those lists of replays, to really quickly drill down to specific insights.

I think that's a really interesting approach: while you’re still technically juggling a lot of “homework”, it’s an easier and much, much less time-consuming way to get to user insights and prioritize product experiences.

The slide deck from Sprig’s presentation

📹 View the full event.
