What we've learned in 3 days of Llama 3

Share On

Kyle Corbitt, the founder of OpenPipe, shared his experience with Llama 3 on the Product Hunt blog. The bottom line? “You can serve a fine-tuned model that outperforms GPT-4 for 1/50th the cost.”

Hey everyone! You’ve probably heard that last Thursday Meta announced Llama 3, a new open-source LLM. There are 3 variants announced so far: a small 8B parameter model, medium 70B parameter model, and very large 405B parameter model. The 405B model is still in training but weights for both of the smaller sizes are available.

Good, Bad, or Ugly?

The Good

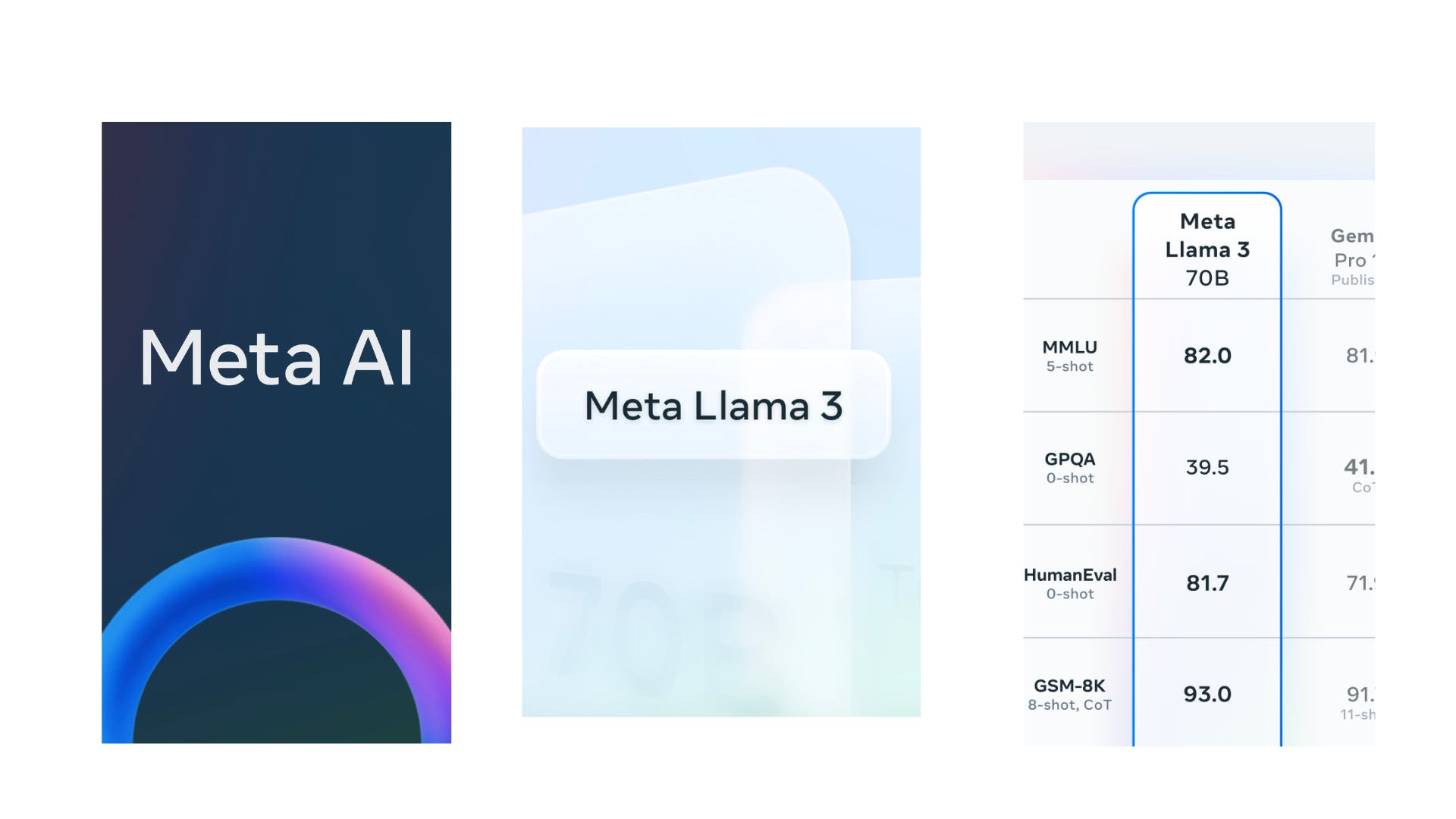

- The benchmarks are absolutely insane. The 8B model is almost as strong as Llama 2’s 70B variant and is much stronger than Mistral 7B, which has been the go-to small model for the last year. The Llama 3 70B model compares favorably to Gemini Pro 1.5 and Claude 3 Sonnet.

- Meta also released preliminary benchmarks for the 405B model, which compare favorably to GPT-4 Turbo. This model has also been confirmed to be multimodal (text+image input), differing from the smaller two which are text-only. Obviously we’ll have to wait and see what the released model looks like but this is really exciting news.

- In addition to the base models, Meta released instruct variants of both the 8B and 70B models. The instruction tuning was really well done and these are ready for use immediately. The 70B model is capable of all tasks that you’d have used GPT-3.5 for, and many tasks that previously required GPT-4.

The Bad

- The released models are capped at an 8K context window, which compares poorly to GPT-4 Turbo (128K), Claude 3 (200K), or even Mistral 7B (32K). That said, there are now good techniques to extend the context window with minimal additional training, so I expect longer-context variants will drop soon.

The Ugly

- We’ll probably have to wait a few more months for the largest model to drop, which could end up being the most impactful of the three.

Serving Llama 3 🍽️

You have many good options to serve Llama 3 models in production. Its architecture is nearly identical to Llama 2, so many of the Llama 2 inference providers already have Llama 3 support live. I’ve included a few providers that I’ve personally used and have confidence in for production workloads below. Note that these are the shared tenancy prices; many of these providers can also give you a dedicated deployment paid by the GPU-hour.

Fine-Tuning Llama 3 ✂🦙

At OpenPipe we’ve found that even models in the 7B weight class can often rival GPT-4 when fine-tuned on your specific task.

We released fine-tuning support for Llama 3 8B on launch day (70B coming soon!). We’ll have benchmarks soon, but in initial testing we’ve found that a fine-tuned Llama 3 8B performs similarly to fine-tuned Mixtral 8x7B, while being much cheaper to serve. And, we had previously found that for most tasks Mixtral is able to outperform GPT-4 when fine-tuned on a high-quality dataset.

This means that for many tasks, you can now serve a fine-tuned model that outperforms GPT-4 for 1/50th the cost. This is of course a huge deal for many business models! So (here comes the pitch): if you’d like to try fine-tuning a Llama 3 variant on your own prompts and completions feel free to create an account at openpipe.ai — it only takes a couple minutes to get started!

About the author

Kyle Corbitt is the founder of OpenPipe, the easiest way to train and deploy your own fine-tuned models. Formerly, Kyle was a director at Y Combinator, engineer at Google, and co-founder at Emberall.

This article was originally published on OpenPipe's blog.

Subscribe to our Developer Tools newsletter, WeirdWideWeb, to get dev tools and build stories in your inbox once a week.

Comments (4)

Rowe Morehouse@rowemore

Sales Likelihood Calculator

Great Article, @corbtt ! .. Bad News for OpenAI -- A free LLM that's pretty much as good as GPT-4.

Bad news for Gemini? Kind of.

The really killer feature of Gemni is not necessarily the generative writing features from a blank-screen starting page, but the Google Workspace integrations, where Gemini can pull data from your Gmail Inbox and create a Google Sheet, for example, or "help you write" **in context**, inside your doc or email draft.

Share

I bookmarked this blog to check for fresh posts. Keep it up, you're awesome! This is an excellent motivational article. Keep it up. To relax and have a good time, try playing fireboy and watergirl.

After three days with Llama 3, I’m impressed by its advanced capabilities and intuitive design. The improvements in performance and id card ribbon supplies user interaction are noticeable, making it a powerful tool for various applications. This short period has already highlighted its potential to enhance productivity and streamline complex tasks efficiently.

Muitas pessoas optam por aplicativos especializados porque oferecem uma experiência mais rápida e personalizada. Com eles, o acesso é direto e a navegação é mais fluida. Além disso, esses aplicativos costumam ser otimizados para dispositivos móveis, melhorando o desempenho. Para quem quer explorar essas vantagens, este https://melbetbr.app/casino/ oferece uma ótima opção de aplicativo.

More stories

Kyle Corbitt · How To · 5 min read

A Founder’s Guide to AI Fine-Tuning

Chris Bakke · How To · 6 min read

A Better Way to Get Your First 10 B2B Customers

Keegan Walden · Makers · 7 min read

The inner work of startup building

Calvin Chen · Makers · 7 min read

Suffering = Growth